*Read the full article on this: https://serverlessfirst.com/testing-tradeoff-triangle/*

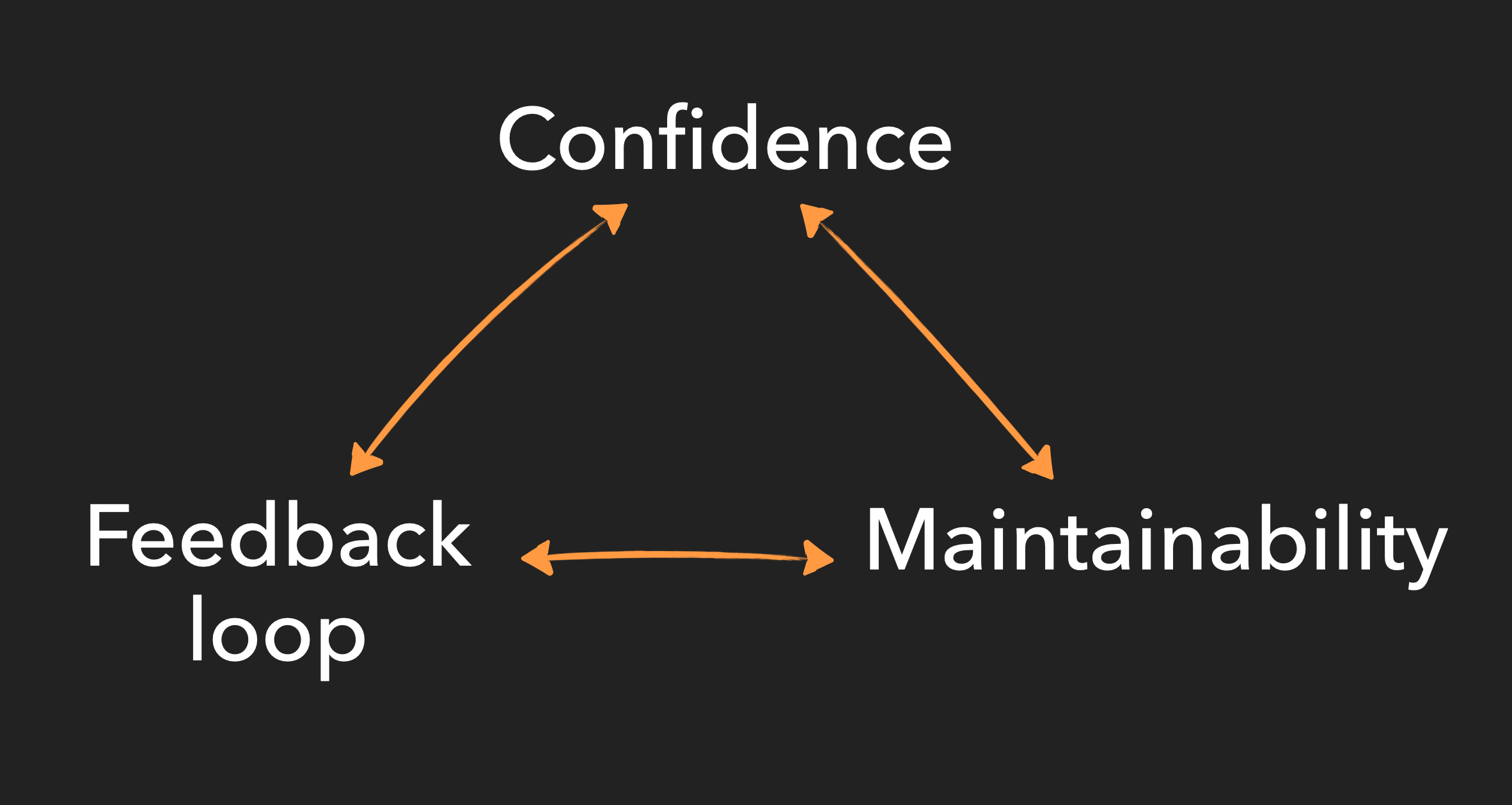

There are 3 core properties to consider when designing [[The purpose of testing software systems|an effective automated test suite]]:

- **Confidence**: [[An effective automated test suite should provide confidence that the system behaves as expected]]

- **Feedback loop**: [[An effective automated test suite should deliver feedback that is fast, accurate and reliable]]

- **Maintainability**: [[An effective automated test suite should make maintenance easier]]

## The tension between each objective

These objectives aren't boolean; each sits on a sliding scale. A test needs "enough" of each to deliver net positive value and therefore be worth keeping (or writing in the first place).

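Second, and crucially, each objective often conflicts with at least one of the other two. For example, tests that run against real deployed services give high confidence but are slow and more prone to flakiness, while tests that mock those services run fast but give less confidence that the real integration works and must be kept in sync with the behaviour they imitate.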

This is what I call the **testing trade-off triangle**.

The general principle is that when deciding upon your testing approach for a new system (or a new feature/change to an existing system), **you need to make a compromise between confidence level, feedback loop and maintainability**.

I believe this trade-off holds true for all categories of software development projects, not just serverless systems.

---

## References

- [The Pains of Testing Serverless Applications](https://serverlessfirst.com/pains-testing-serverless/) by Paul Swail

- [Serverless, Testing and two Thinking Hats](https://blog.symphonia.io/posts/2020-08-19_serverless_testing) by Mike Roberts

- [Test pyramid](https://martinfowler.com/bliki/TestPyramid.html) by Martin Fowler

- [Test honeycomb for testing microservices](https://engineering.atspotify.com/2018/01/11/testing-of-microservices/) by Andre Schaffer & Rickard Dybeck (Spotify Engineering)